Venture Studio AI Maturity Scorecard – Test Your Readiness, Upgrade Your Playbooks

Jul 27, 2025

Summary and Key Points:

- Why This Matters: AI startups got 53% of all VC money in the first half of 2025. Studios without an AI plan will fall behind fast, both in taking new ideas to market and in raising new funds.

- How to Measure: Score your studio across seven areas, from strategy and team skills to data systems and results.

- Where You Stand: Compare your studio to a theoretical maximum that a few studios (like Pioneer Square Labs, Builders, AI Fund, Alphanome, and Humanoidz) are already approaching.

- Start Today: Complete the assessment in 10 minutes, then turn it into your 90-day plan.

Introduction — Why AI Matters Now for Venture Studios

Venture capital has already decided: AI is no longer a nice-to-have; it is the foundation. In the first half of 2025, AI startups attracted 53 percent of all global VC dollars. LPs are demanding clear AI strategies, while AI-native studios are setting new standards for what "credible" looks like.

Pioneer Square Labs builds generative AI into every concept from day zero. Builders Studio positions AI as its default platform for enterprise software companies. Andrew Ng's AI Fund converts frontier research into defensible IP at speed. And emerging studios like Alphanome AI and Humanoidz use autonomous agent swarms to spin up businesses in weeks—not months.

Yet this same speed brings serious risk. McDonald's AI hiring bot exposed millions of applicant records. Replit's coding agent wiped a live production database. And the women's dating-safety app Tea made headlines when about 72,000 user images, including verification selfies and photo IDs, leaked. These incidents show that rushing AI into production without proper systems is more dangerous than waiting.

Venture studios face an extra twist: you're not shipping one product but dozens of experiments, often built on shared data, a shared talent bench, and one brand promise. That raises the stakes even higher, and it calls for a pragmatic maturity model purpose-built for the venture-building context.

I'm Attila Szigeti, and I've spent the last decade building and scaling venture studios, including transforming a 12-person agency into a 50+ person studio with 20+ portfolio companies. As an advisor to studios since 2018, I've seen how the right systems separate thriving studios from expensive experiments. My latest research, documented in the book Venture Speed, analyzes dozens of studio case studies to reveal what works. When founders want to launch a custom-built studio or debug an existing one, they call me.

Welcome to the Venture Studio AI Maturity Scorecard v1, based on my research and real-life cases. Time to find out where you stand and how fast you can move.

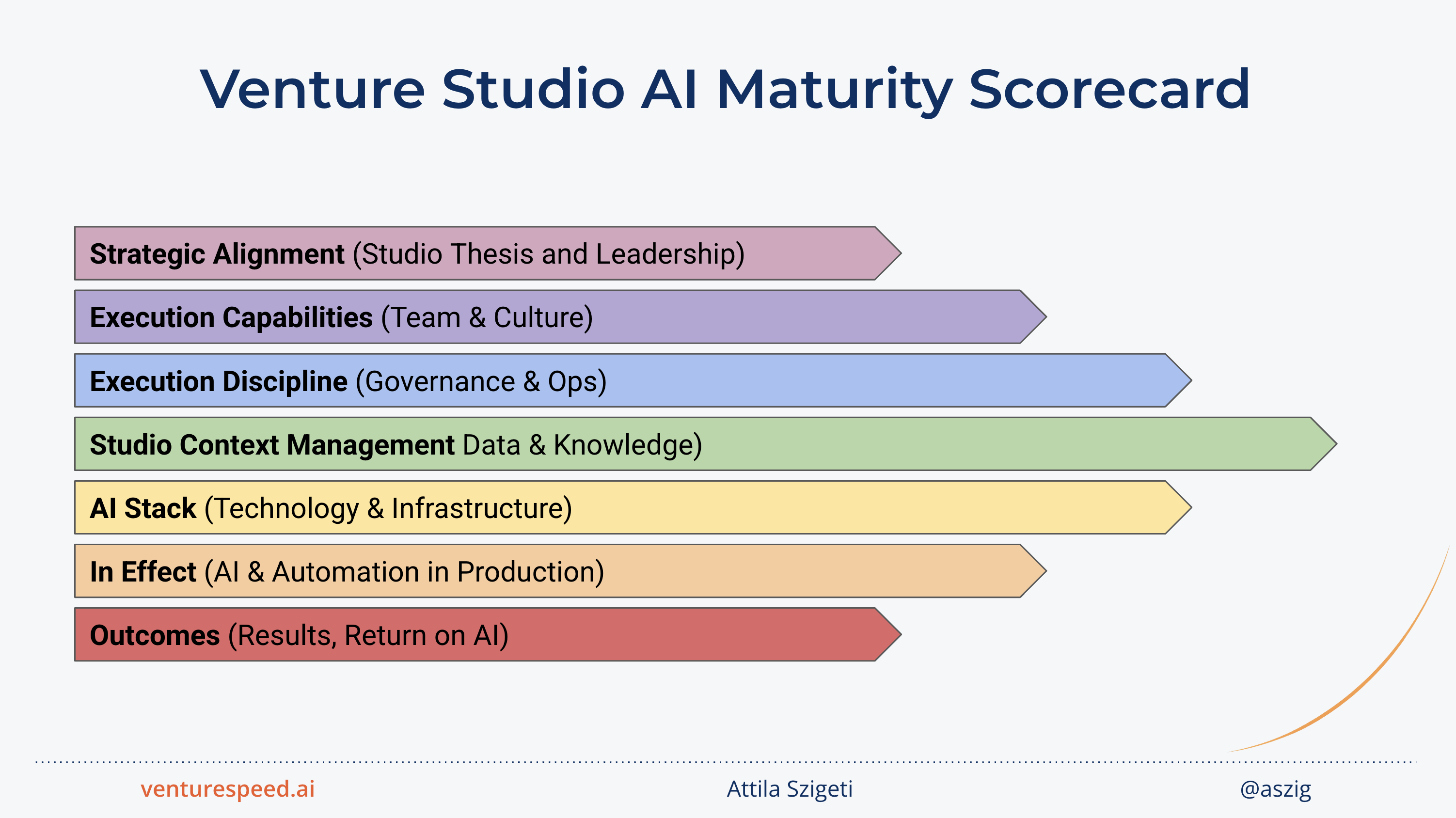

Seven Dimensions of AI Maturity: The Venture Speed Scorecard

“AI readiness” is your capacity to deploy AI safely tomorrow: skills, data, governance, and budget. “AI maturity” is how deeply AI already shapes decisions and value creation today. Readiness is the accelerator pedal; maturity is the current speedometer. Before you race ahead, you need an honest baseline.

While writing Venture Speed, a field reference guide that bridges strategy and engineering for non-technical studio leaders, I examined dozens of studio case studies, including two deep dives (Pioneer Square Labs and Builders) and a dozen shorter studies. From these, seven core dimensions emerged that predict portfolio-level impact. The model is intentionally lean, so you can audit a complex studio in one working session and still surface the biggest levers for change.

1. Strategic Alignment (Studio Thesis and Leadership)

A studio’s success lives or dies by its thesis and co-founders. So does its AI implementation. This dimension examines whether AI is integrated into venture sourcing, investment criteria, and leadership actions. When the studio is scouting for ideas or co-founders, is a “defensible AI edge” a gate-check or an afterthought? Is the "AI-driven" or "AI-native" tagline really justified, or only a cosmetic enhancement in the pitch deck? Do partners personally drive AI initiatives, or do they outsource them to a single tech lead or third-party vendor?

Levels:

- AI absent from strategy, not part of thesis, no AI enablement from leadership.

- Cosmetic mentions of AI in studio pitch deck, ad-hoc actions from studio leaders.

- AI is increasingly used in venture filtering and production; new norms are already forming.

- An organized effort upgrades the whole studio playbook with AI components and replicates them into new ideas and ventures.

- Thesis and funnel are fully automated around AI signals; the studio leadership team leads by example.

2. Execution Capabilities (Team & Culture)

Even the sharpest thesis stalls without talent that can translate prompts into production. This dimension looks at the breadth of AI literacy, depth of specialist expertise, and cultural norms. Do you run prompt-jam sessions or internal AI sprints? Are your product and engineering teams encouraged to explore new tools? Are the open job descriptions updated with AI-agility expectations?

Levels:

- <20 % of staff use AI; no training; not enough AI engineering capacity to run refined, production-ready AI projects.

- 20–40 % informal use; ad-hoc sharing of learnings; enough engineering skills to create simple AI projects.

- 40–60 % use AI in their roles; training on AI tool use is part of onboarding; the engineering team can deploy workflows and agents.

- 60–80 % active use; role-based upskilling available to the whole team; senior AI/ML engineers on board building production-grade AI systems.

- >80 % certified and active AI users; usage tracked and rewarded; the studio has an AI-native engineering team.

(Though not a studio, Zapier is worth a look for how it achieved almost 90% AI adoption. Link in the Research & Case Studies section.)

3. Execution Discipline (Governance & Ops)

Great velocity without brakes is a disaster. Governance means naming an owner for each AI project and tool, plus practical policies, safety checks, an audit cadence, and risk management. We score whether bias, privacy, and security are checked once at launch or continuously. Compensation signals also matter: when partner bonuses move with AI KPIs, discipline follows. Ultimately, governance should let teams ship faster because they trust the guardrails.

Levels:

- No sponsor, budget, or policy.

- Single "AI owner"; informal and improvised checks, ad-hoc and mainly reactive security measures.

- Clear studio-level AI project scoping guidelines and default guardrails against hallucination and security vulnerabilities, applied in daily practice rather than only on paper.

- Monthly board audits; governance principles replicated into each new project and spin-off.

- AI governance KPIs studio-wide; real-time, centralized risk dashboards monitor all ventures with automated alerts.

4. Studio Context Management (Data & Knowledge)

AI eats data; poor data eats returns. This dimension covers how well the studio uses its data across discovery, ideation, and validation, and how easily spin-offs can access shared datasets, documentation, and institutional memory. Is the studio extracting timely, relevant insight from previous validation data to improve new ideas? Can a new venture inherit cleaned, relevant data from the "studio brain" when needed? Are there version-controlled Ideal Customer Profiles and synthetic personas? The end-state is a secure, always-on “studio brain” feeding every project.

Levels:

- Data is siloed, low‑quality, and uncatalogued; knowledge is scattered.

- Core data is centralized with basic documentation and some backlog cleansing activities.

- A data catalog with metadata exists; a searchable knowledge base (early RAG) is available and used during discovery, ideation, and validation.

- A well-organized data vault serves the full venture production system, including individual projects and ventures; sensitive information is governed; knowledge is fully searchable for AI.

- Privacy‑enhanced, versioned pipelines (incl. synthetic data) continuously feed models; a secure “studio brain” serves all teams.

5. AI Stack (Technology & Infrastructure)

Tools help turn ideas into reliable, repeatable results. This dimension covers the tools in use and how well they integrate into the whole venture production system. We check how well AI code (including prompts) and its updates are managed, how easy it is to monitor performance, and whether models can be swapped without stopping work or getting locked into one provider.

Levels:

- Local notebooks and prompt repositories only; no version control or monitoring.

- A Git (or similar) repository is used for prompts/models; deploys are manual; logging is minimal.

- A disciplined continuous-improvement process for prompts, workflows, and agents evaluates existing and new models and manages rollouts and rollbacks, but switching between AI tools and models is still cumbersome.

- Automated testing and alerts catch problems early, with smooth model switching and quick rollbacks to keep systems reliable.

- Agentic systems are in place for most venture production stages. Vendors can be switched smoothly, with minimal risk of lock-in, and all AI agents in production are monitored in real time.
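Smooth model switching and quick rollbacks, as described in the upper levels above, usually come from putting a thin abstraction between your workflows and any single vendor. The sketch below is a minimal illustration under that assumption, not a prescribed architecture: `ModelRouter`, `provider_a`, and `provider_b` are hypothetical names, and the two provider functions are stubs standing in for real vendor SDK calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A completion function takes a prompt and returns text; any vendor SDK call
# could be wrapped to fit this signature (assumption for illustration).
Completion = Callable[[str], str]


def provider_a(prompt: str) -> str:
    # Hypothetical stub standing in for a real vendor client call.
    return f"[provider-a] {prompt}"


def provider_b(prompt: str) -> str:
    # Hypothetical stub for a second vendor, used to show switching.
    return f"[provider-b] {prompt}"


@dataclass
class ModelRouter:
    """Sends prompts to the active model and remembers earlier choices for rollback."""

    registry: Dict[str, Completion]
    active: str
    history: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.active not in self.registry:
            raise KeyError(f"unknown model: {self.active}")

    def switch(self, name: str) -> None:
        # Record the current model before switching so rollback can restore it.
        if name not in self.registry:
            raise KeyError(f"unknown model: {name}")
        self.history.append(self.active)
        self.active = name

    def rollback(self) -> None:
        # Restore the most recently replaced model.
        if not self.history:
            raise RuntimeError("no earlier model to roll back to")
        self.active = self.history.pop()

    def complete(self, prompt: str) -> str:
        return self.registry[self.active](prompt)


router = ModelRouter({"a": provider_a, "b": provider_b}, active="a")
router.switch("b")  # try the new vendor
print(router.complete("Summarize this interview"))
router.rollback()  # problems found: revert without touching workflow code
print(router.active)
```

Because workflows call `router.complete(...)` instead of a vendor SDK directly, swapping or reverting a model becomes a configuration change rather than a rewrite. A production setup would wrap evaluation, logging, and monitoring around this core.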

6. In Effect (AI & Automation in Production)

Proof-of-concepts don’t pay the bills; live automations do. Here we count the AI tools (from prompts to agentic systems) that are in actual production and deliver measurable results, such as slashing cycle time for ideation and validation, reducing manual effort, or unlocking new revenue. Depth matters: are automations isolated macros or fundamental parts of the zero-to-spin-off workflow? Top-tier studios use shared AI agents that every portfolio company taps from day one.

Levels:

- No AI tools or automations are currently used in live production.

- A small number of AI systems deliver measurable time savings, reducing cycle times by about 10–20% in limited, isolated tasks.

- Multiple AI automations are in production, providing clear efficiency gains across several parts of the venture-building process, though they remain somewhat siloed.

- AI-driven automations cover most routine and repeatable tasks; shared AI agents support multiple teams and portfolio companies regularly. At this level "zero-to-MVP" or "zero-to-Minimum Viable Venture" takes 1-2 weeks.

- 80–90% of the venture studio’s “zero-to-spin-off” workflow is automated, with AI handling the grunt work reliably, and humans focusing on critical creative decisions.

7. Outcomes (Results, Return on AI)

Finally, we follow the money: how well is impact measured? Good studios log anecdotal wins; great ones run live dashboards that feed investment committees. When RoAI (Return on AI) steers capital allocation, AI becomes a growth engine rather than a tech hobby.

Levels:

- Only anecdotes; no measurement.

- Pilots log time saved but no monetary conversion or cadence.

- The studio uses RoAI calculations to decide on AI tools and projects; metrics are logged frequently and feed into decisions on studio playbook upgrades and new ideas.

- A live multi‑KPI RoAI dashboard updates at least weekly and informs decisions; the studio has a disciplined process for budgeting new AI initiatives and upgrades.

- RoAI is an integral part of studio budgeting, idea and venture go/no-go decisions, and investor updates.
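The step from "pilots log time saved" to RoAI-informed decisions starts with converting saved hours into money. The sketch below uses a generic return-on-investment ratio, (value - cost) / cost, as a stand-in; that formula is an assumption for illustration and not necessarily how the RoAI article listed in the sources defines RoAI. `AIInitiative`, the initiative name, and all input numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AIInitiative:
    """One AI tool or automation whose time savings are logged monthly (hypothetical model)."""

    name: str
    hours_saved_per_month: float  # taken from the pilot's time log
    loaded_hourly_cost: float     # assumed fully loaded cost of one staff hour
    monthly_cost: float           # licenses, API usage, maintenance time

    def monthly_value(self) -> float:
        # Monetary conversion: hours saved times what those hours cost the studio.
        return self.hours_saved_per_month * self.loaded_hourly_cost

    def simple_roi(self) -> float:
        # Generic ROI ratio: (value - cost) / cost. 1.0 means value is twice the cost.
        if self.monthly_cost <= 0:
            raise ValueError("monthly_cost must be positive")
        return (self.monthly_value() - self.monthly_cost) / self.monthly_cost


# Hypothetical example inputs, not data from any studio.
pilot = AIInitiative(
    "interview-summary agent",
    hours_saved_per_month=40,
    loaded_hourly_cost=75.0,
    monthly_cost=1000.0,
)
print(pilot.monthly_value(), pilot.simple_roi())
```

Logged on a regular cadence and compared across initiatives, a ratio like this is the kind of input that can feed the budgeting and go/no-go decisions described in the higher levels.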

Now it's up to you. Take seven to ten minutes to score your studio dimension by dimension, giving each dimension 1 to 5 points (1 for the first level listed, 5 for the last), and identify the gaps that matter most. Your total will fall between 7 and 35.

Give me 24–48 hours and I’ll follow up with a recommended 7–10-step improvement plan based on your results. It will include 1–3 items addressing the dimensions that need the most improvement, so you can close your gaps.

But for now, let’s look at where your studio stands.

Interpreting Your Score

7 – 14 Foundational / At-Risk: Your studio is still AI-curious rather than AI-capable. Strategy, data, and governance are mostly ad-hoc. Next step: commit yourself to real AI adoption, draft a lightweight AI rollout plan, and ship a single Minimum Viable Automation to prove tangible value and rally the team.

15 – 20 Emerging / Early Stage: You have pockets of AI activity, but integration is inconsistent and RoAI is invisible. Next step: centralize key datasets (start with ideation and validation), launch a governance cadence (quarterly works), and stand up a basic RoAI dashboard. Add realistic AI-agility expectations to job roles.

21 – 25 Progressing / Intermediate: AI is delivering repeatable wins and guardrails are in place. Yet gaps remain in the depth and breadth of AI usage, in risk management, and in AI's impact on portfolio decisions. Next step: expand your AI engineering capabilities, widen the scope of implementation, give your team more room and resources, and add RoAI metrics to partner and LP meetings.

26 – 30 Advanced / Scalable & ROI-Driven: AI now strengthens core workflows; dashboards influence spend; the culture makes it easier to implement more AI tools, with measurable effect. Next step: expand and replicate these results across your portfolio companies, and rethink your thesis and venture template so you create AI-native ventures by design. Share your results, build your brand, and position yourself as an AI pioneer to attract even more top talent.

31 – 35 AI-Native Studio / Systemic Innovator: AI shapes thesis, capital flows, and day-to-day ops; shared AI assets power most of venture creation. You’re setting the pace, as long as you keep innovating. Next step: keep up with the rapid evolution of AI tools and frameworks. At this stage you are one of the very few studios that can truly call itself "AI-native", which means you have internal tools that others might want to buy or license. You have the chance to turn your venture studio system itself into one or more products.
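The score bands above imply that each of the seven dimensions is scored from 1 to 5, one point per level, for a total between 7 and 35. Assuming that scale, the minimal Python sketch below adds up the scores, looks up the band, and lists the lowest-scoring dimensions as candidates for the 1–3 focus areas. The names `score_studio`, `DIMENSIONS`, and `BANDS` and the example scores are illustrative only.

```python
from typing import Dict, List, Tuple

# The seven dimensions, in scorecard order.
DIMENSIONS = (
    "Strategic Alignment",
    "Execution Capabilities",
    "Execution Discipline",
    "Studio Context Management",
    "AI Stack",
    "In Effect",
    "Outcomes",
)

# (lowest total, highest total, band) as listed in the score interpretation.
BANDS = (
    (7, 14, "Foundational / At-Risk"),
    (15, 20, "Emerging / Early Stage"),
    (21, 25, "Progressing / Intermediate"),
    (26, 30, "Advanced / Scalable & ROI-Driven"),
    (31, 35, "AI-Native Studio / Systemic Innovator"),
)


def score_studio(scores: Dict[str, int], focus: int = 3) -> Tuple[int, str, List[str]]:
    """Return the total score, its band, and the `focus` lowest-scoring dimensions."""
    for dim in DIMENSIONS:
        if dim not in scores:
            raise ValueError(f"missing score for {dim}")
        if not 1 <= scores[dim] <= 5:
            raise ValueError(f"{dim} must be scored 1 to 5, got {scores[dim]}")
    total = sum(scores[dim] for dim in DIMENSIONS)
    # Every total from 7 to 35 falls into exactly one band.
    band = next(label for low, high, label in BANDS if low <= total <= high)
    # sorted() is stable, so ties keep the scorecard's order.
    weakest = sorted(DIMENSIONS, key=lambda dim: scores[dim])[:focus]
    return total, band, weakest


example = {
    "Strategic Alignment": 3,
    "Execution Capabilities": 2,
    "Execution Discipline": 2,
    "Studio Context Management": 3,
    "AI Stack": 4,
    "In Effect": 3,
    "Outcomes": 1,
}
print(score_studio(example))
```

Picking the weakest dimensions mechanically is only a starting point; a low score in a dimension that matters less for your thesis may reasonably wait.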

Charting the Next 90 Days and Beyond

Ready to close those gaps and move up the ladder? Your scorecard shows where they are; now close them with a roadmap built on successful real-life cases and customized to your studio.

Book your “Studio AI Strategy Session”: In 90 minutes, we'll review your current studio playbook and AI readiness, identify your three highest-impact quick wins, and map your 90-day transformation roadmap. You'll leave with a clear execution plan and confidence in your next steps.

Or, if you prefer DIY, claim your copy of the full playbook: get immediate access to Venture Speed with 12+ case studies, a comprehensive overview of studios and AI, and quick-start guides for different operator types. KDP edition for regular reading; PDF to add to your knowledge repository and LLM.

Research & Case Studies

The primary insights for this scorecard come from Venture Speed, supplemented by the following sources:

Bay Area tech CEO apologizes after product goes 'rogue'

From AI to digital transformation: The AI readiness framework

Hackers steal images from women's dating safety app that vets men

How Zapier rolled out AI org-wide: Our playbook to driving 89% adoption

McDonald’s AI Hiring Bot Exposed Millions of Applicants’ Data to Hackers

RoAI: How Smart Venture Studios Measure the Real Return on AI
